Microsoft officially updated the DP-420 Azure Cosmos DB Developer Specialty exam on April 25, 2024. That’s why I want to share how to study for the updated exam.

To help you study and prepare for the Microsoft DP-420 exam, this article shares online resources (mainly Microsoft Docs and Microsoft Learn) that cover the exam changes, as well as the latest DP-420 practice questions. You will also find out how I prepared for the exam.

Contents:

- What is the updated Microsoft DP-420 exam like

- How to prepare for the updated Microsoft DP-420

- New resource sharing for the new Microsoft DP-420 exam

- The latest DP-420 mock practice questions

- Achieve your dreams and have a successful career

Updated Microsoft DP-420 exam

Microsoft DP-420 is the Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB exam. Passing it earns the Microsoft Certified: Azure Cosmos DB Developer Specialty certification. The exam focuses on the design, integration, optimization, and maintenance of Azure Cosmos DB solutions.

The exam consists of 40-60 questions, and the minimum passing score is 700. It can be taken in English, Japanese, Chinese (Simplified), Korean, German, French, Spanish, Portuguese (Brazil), Arabic (Saudi Arabia), Russian, Chinese (Traditional), Italian, and Indonesian (Indonesia). The exam costs $165.

It is essential to be familiar with the exam objectives and the skills measured, and to pay particular attention to the ones that were updated.

Exam Objectives:

You should have subject matter expertise in designing, implementing, and monitoring cloud-native applications that store and manage data.

You should be able to integrate the solution with other Azure services, and to design, implement, and monitor solutions that take into account security, availability, resiliency, and performance requirements.

You should be proficient in developing applications that use the Azure Cosmos DB for NoSQL API. You should also be familiar with configuring and managing resources in Azure.

Skills measured:

- Design and implement data models (35–40%)

- Design and implement data distribution (5–10%)

- Integrate an Azure Cosmos DB solution (5–10%)

- Optimize an Azure Cosmos DB solution (15–20%)

- Maintain an Azure Cosmos DB solution (25–30%)

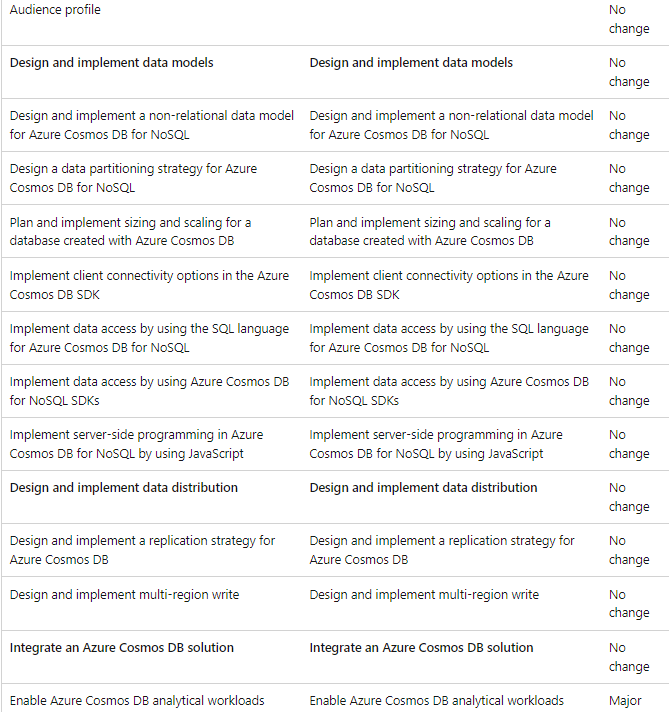

You should also pay attention to the chart that shows how the exam content changed.

The chart compares the two versions of the skills measured, and its third column describes the extent of each change. Study it carefully.

The above is excerpted from: https://learn.microsoft.com/en-us/credentials/certifications/resources/study-guides/dp-420

How to prepare for the updated Azure Cosmos DB Developer Specialty DP-420

If you are sure that you want to take the updated DP-420 exam, you should do the following:

- Learn about the exam content: read the objectives, the skills measured, and the before-and-after comparison of the changes, all of which you can find on Microsoft Docs and Microsoft Learn.

- Understand the exam format and question types. Microsoft doesn’t list the specific question types used on the exam, but you can see them in practice tests (official or third-party).

- Take a hands-on learning course on Microsoft Learn. It’s free!

- Get hands-on experience by working with the technology in practice.

- Participate in courses and training, such as LinkedIn Learning, Pluralsight, etc.

- Use books to learn and prepare; Microsoft Press is a good place to look.

- Take a mock test, which is the last and most important point. It is highly recommended that you take mock exams to test your preparation with practice questions. You can find the free DP-420 practice test below in this article.

New resource sharing for the new Microsoft DP-420 exam

To cope with the updated exam, a batch of new DP-420 resources have been prepared to share with you, with links to facilitate your learning. After all, the exam has been updated, and with it, the DP-420 learning resources must also be updated to be effective.

Books:

- Designing and Implementing Cloud-native Applications Using Microsoft Azure Cosmos DB: Study Companion for the DP-420 Exam (Certification Study Companion Series) 1st ed.

- Microsoft Azure Cosmos DB Revealed: A Multi-Model Database Designed for the Cloud

- Guide to NoSQL with Azure Cosmos DB: Work with the massively scalable Azure database service with JSON, C#, LINQ, and .NET Core 2

- DP-420: Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB – Practice Tests Kindle Edition

- Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB Exam Dumps & Pocket Guide: Exam Review for Microsoft DP-420 Certification Kindle Edition

- DP-420: Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB 51 Questions: This Practice Course will help you get full … in your Microsoft DP-420 Certification exam Kindle Edition

Documentation:

- Introduction to open source database migration to Azure Cosmos DB – Training

- Microsoft Certified: Azure Cosmos DB Developer Specialty – Certifications

- Azure Cosmos DB documentation

- Analytics on Azure – Microsoft Tech Community

- Azure Data Factory – Microsoft Tech Community

- Azure – Microsoft Tech Community

- Data modeling in Azure Cosmos DB

- How to model and partition data on Azure Cosmos DB using a real-world example

- Create a synthetic partition key

- Unique key constraints in Azure Cosmos DB

- Azure Cosmos DB for the SQL Professional – Referencing Tables

- Indexing in Azure Cosmos DB – Overview

- Configure time to live in Azure Cosmos DB

- Partitioning and horizontal scaling in Azure Cosmos DB

- Getting started with SQL queries

- Azure Cosmos DB service quotas

- Introduction to provisioned throughput in Azure Cosmos DB

- Create Azure Cosmos containers and databases with autoscale throughput

- Plan and manage costs for Azure Cosmos DB

- Request Units in Azure Cosmos DB

- Convert the number of vCores or vCPUs in your nonrelational database to Azure Cosmos DB RU/s

- Optimize provisioned throughput cost in Azure Cosmos DB

- Provision standard (manual) throughput on a database in Azure Cosmos DB – SQL API

- Azure Cosmos DB SQL SDK connectivity modes

- Performance tips for Azure Cosmos DB and .NET

- Diagnose and troubleshoot issues when using Azure Cosmos DB .NET SDK

- Troubleshoot issues when you use Azure Cosmos DB Java SDK v4 with SQL API accounts

- Performance tips for Azure Cosmos DB and .NET SDK v2

- Tutorial: Set up Azure Cosmos DB global distribution using the SQL API

- Global data distribution with Azure Cosmos DB – under the hood

- Distribute your data globally with Azure Cosmos DB

- Install and use the Azure Cosmos DB Emulator for local development and testing

- Monitor Azure Cosmos DB data by using diagnostic settings in Azure

- Working with arrays and objects in Azure Cosmos DB

- SQL subquery examples for Azure Cosmos DB

- User-defined functions (UDFs) in Azure Cosmos DB

- System functions (Azure Cosmos DB)

- Date and time functions (Azure Cosmos DB)

- Mathematical functions (Azure Cosmos DB)

- String functions (Azure Cosmos DB)

- Parameterized queries in Azure Cosmos DB

- Azure Cosmos DB .NET SDK v3 for SQL API: Download and release notes

- Azure Cosmos DB libraries for .NET

- Tutorial: Build a .NET console app to manage data in Azure Cosmos DB SQL API account

- Use the bulk executor .NET library to perform bulk operations in Azure Cosmos DB

- Bulk import data to Azure Cosmos DB SQL API account by using the .NET SDK

- Transactions and optimistic concurrency control

- Time to Live (TTL) in Azure Cosmos DB

- JavaScript query API in Azure Cosmos DB

- Azure Cosmos DB client library for JavaScript – Version 3.14.1

- Develop a JavaScript application for Cosmos DB with SQL API

- How to write stored procedures and triggers in Azure Cosmos DB by using the JavaScript query API

- Stored procedures, triggers, and user-defined functions

- How does Azure Cosmos DB provide high availability

- Configure Azure Cosmos DB account with periodic backup

- Data partitioning strategies

- Consistency levels in Azure Cosmos DB

- Configure multi-region writes in your applications that use Azure Cosmos DB

- Optimize multi-region cost in Azure Cosmos DB

Take the latest DP-420 practice questions online for free

Practice exams are essential for better preparation because they allow you to assess your strengths and weaknesses. You can start with the free Pass4itSure DP-420 mock test. Doing so will not only help you evaluate yourself, but also improve your answering skills and save you a lot of time.

| From | Questions |
| --- | --- |
| Pass4itSure | 1-15 |

Question 1:

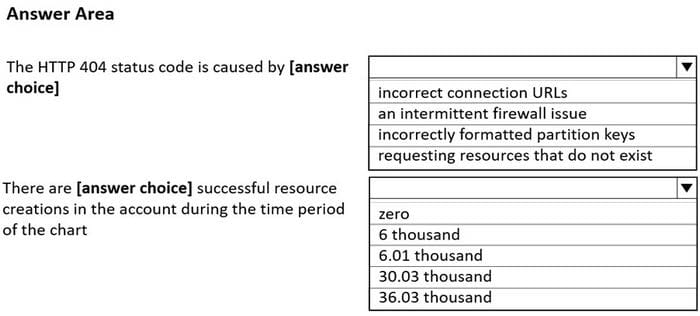

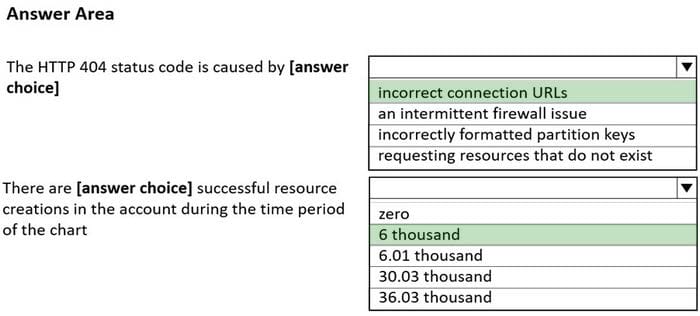

HOTSPOT

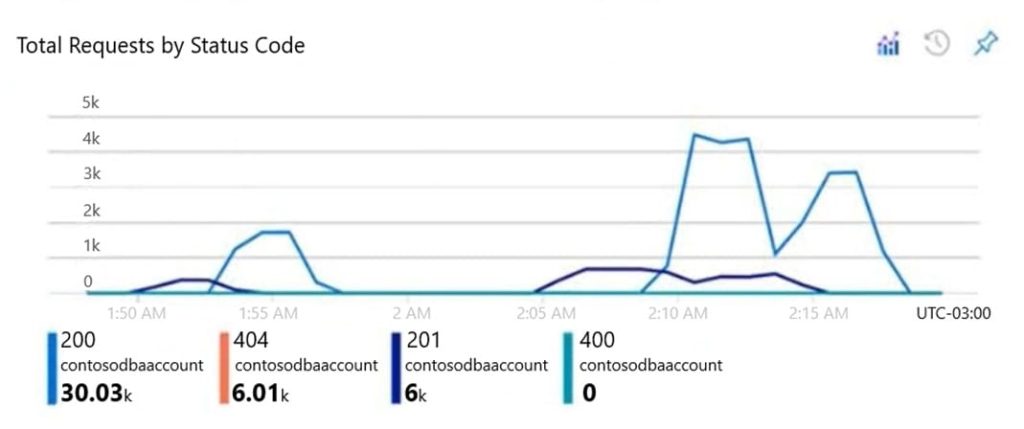

You have an Azure Cosmos DB Core (SQL) API account used by an application named App1.

You open the Insights pane for the account and see the following chart.

Use the drop-down menus to select the answer choice that answers each question based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: incorrect connection URLs

400 Bad Request: Returned when there is an error in the request URI, headers, or body. The response body will contain an error message explaining what the specific problem is.

The HyperText Transfer Protocol (HTTP) 400 Bad Request response status code indicates that the server cannot or will not process the request due to something that is perceived to be a client error (for example, malformed request syntax, invalid request message framing, or deceptive request routing).

Box 2: 6 thousand

201 Created: Success on PUT or POST. An object created or updated successfully.

Note:

200 OK: Success on GET, PUT, or POST. Returned for a successful response.

404 Not Found: Returned when a resource does not exist on the server. If you are managing or querying an index, check the syntax and verify the index name is specified correctly.

Reference:

https://docs.microsoft.com/en-us/rest/api/searchservice/http-status-codes

Question 2:

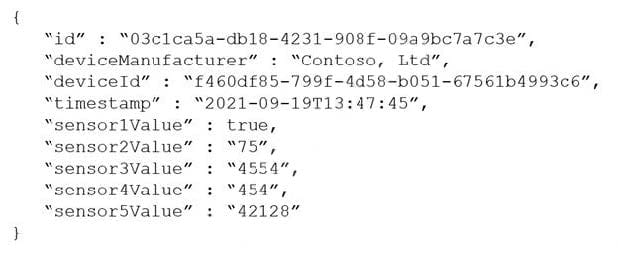

You are designing an Azure Cosmos DB Core (SQL) API solution to store data from IoT devices. Writes from the devices will occur every second. The following is a sample of the data.

You need to select a partition key that meets the following requirements for writes:

1. Minimizes the partition skew

2. Avoids capacity limits

3. Avoids hot partitions

What should you do?

A. Use timestamps for the partition key.

B. Create a new synthetic key that contains devices and sensor1Value.

C. Create a new synthetic key that contains devices and device manufacturers.

D. Create a new synthetic key that contains devices and a random number.

Correct Answer: D

Use a partition key with a random suffix. Distribute the workload more evenly by appending a random number at the end of the partition key value. When you distribute items in this way, you can perform parallel write operations across partitions.

Incorrect Answers:

A: Do not partition the data on a timestamp, because this creates a hot partition. If the data is partitioned on time, then for a given minute all the writes hit one partition. Retrieving the data for a single device also becomes a fan-out query, because that data may be spread across all the partitions.

B: sensor1Value has only two values.

C: All the devices could have the same manufacturer.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/synthetic-partition-keys
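The random-suffix approach from the explanation above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `device-001` ID, the `SUFFIX_COUNT` of 10, and the function names are all hypothetical and do not come from the exam question or from any SDK.

```python
import random
from collections import Counter

SUFFIX_COUNT = 10  # hypothetical: how many buckets each device's writes are spread over


def synthetic_partition_key(device_id: str, rng: random.Random) -> str:
    """Append a random suffix so one device's writes land in several logical partitions."""
    return f"{device_id}-{rng.randrange(SUFFIX_COUNT)}"


def simulate_writes(device_id: str, writes: int, seed: int = 0) -> Counter:
    """Count how many writes each synthetic partition key value would receive."""
    rng = random.Random(seed)
    return Counter(synthetic_partition_key(device_id, rng) for _ in range(writes))


if __name__ == "__main__":
    counts = simulate_writes("device-001", writes=10_000)
    for key, n in sorted(counts.items()):
        print(key, n)
```

The trade-off is on the read side: to read everything for one device, a query has to cover every suffix value, so this pattern suits write-heavy workloads best.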

Question 3:

You have a container in an Azure Cosmos DB for NoSQL account. You need to create an alert based on a custom Log Analytics query. Which signal type should you use?

A. Log

B. Metrics

C. Activity Log

D. Resource Health

Correct Answer: A

Explanation:

You can receive an alert based on the metrics, activity log events, or Log Analytics logs on your Azure Cosmos DB account:

Log Analytics logs – This alert triggers when the value of a specified property in the results of a Log Analytics query crosses a threshold you assign. For example, you can write a Log Analytics query to monitor if the storage for a logical partition key is reaching the 20 GB logical partition key storage limit in Azure Cosmos DB.

Incorrect:

Metrics – The alert triggers when the value of a specified metric crosses a threshold you assign. For example, when the total request units consumed exceed 1000 RU/s. This alert is triggered both when the condition is first met and then afterwards when that condition is no longer being met.

Activity log events – This alert triggers when a certain event occurs. For example, when the keys of your Azure Cosmos DB account are accessed or refreshed.

Reference:

https://learn.microsoft.com/en-us/azure/cosmos-db/create-alerts

Question 4:

You have a container in an Azure Cosmos DB for NoSQL account.

Data update volumes are unpredictable.

You need to process the change feed of the container by using a web app that has multiple instances. The change feed will be processed by using the change feed processor from the Azure Cosmos DB SDK. The multiple instances must share the workload.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Configure the same processor name for all the instances.

B. Configure a different processor name for each instance.

C. Configure a different lease container configuration for each instance.

D. Configure the same instance name for all the instances.

E. Configure a different instance name for each instance.

F. Configure the same lease container configuration for all the instances.

Correct Answer: AEF

Question 5:

You have an Azure Cosmos DB for NoSQL account named account1.

You need to create a container named Container1 in account1 by using the Azure Cosmos DB .NET SDK. The solution must ensure that the items in Container1 never expire.

What should you set?

A. TimeToLivePropertyPath to null

B. TimeToLivePropertyPath to 0

C. DefaultTimeToLive to null

D. DefaultTimeToLive to –1

Correct Answer: D

Explanation:

Time to live for containers and items

The time to live value is set in seconds, and it is interpreted as a delta from the time that an item was last modified. You can set time to live on a container or an item within the container:

Time to Live on a container (set using DefaultTimeToLive):

If missing (or set to null), items do not expire automatically.

If present and the value is set to “-1”, it is equal to infinity, and items don’t expire by default.

If present and the value is set to some non-zero number “n” – items will expire “n” seconds after their last modified time.

Reference:

https://learn.microsoft.com/en-us/azure/cosmos-db/nosql/time-to-live
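The TTL rules above can be written down as a small decision function. This is a minimal sketch in plain Python, not SDK code: the function name and its parameters are hypothetical. It also assumes the documented behavior that an item-level `ttl` only takes effect when the container's DefaultTimeToLive is present, and that an item-level value overrides the container default.

```python
from typing import Optional


def effective_ttl_seconds(container_default_ttl: Optional[int],
                          item_ttl: Optional[int] = None) -> Optional[int]:
    """Return the seconds after last modification at which an item expires.

    None means the item never expires.
    """
    # DefaultTimeToLive missing or null: TTL is off and any item-level ttl is ignored.
    if container_default_ttl is None:
        return None
    # An item-level ttl, when present, overrides the container default.
    ttl = item_ttl if item_ttl is not None else container_default_ttl
    if ttl == -1:  # -1 means "infinity": the item does not expire
        return None
    if ttl > 0:
        return ttl
    # Assumption of this sketch: any other value is treated as invalid.
    raise ValueError("TTL must be -1 or a positive number of seconds")


if __name__ == "__main__":
    print(effective_ttl_seconds(-1))        # container default of -1
    print(effective_ttl_seconds(-1, 60))    # item-level override
    print(effective_ttl_seconds(None, 60))  # TTL off on the container
```

Setting DefaultTimeToLive to -1 keeps TTL enabled on the container while items never expire by default, and individual items can still opt in to expiry.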

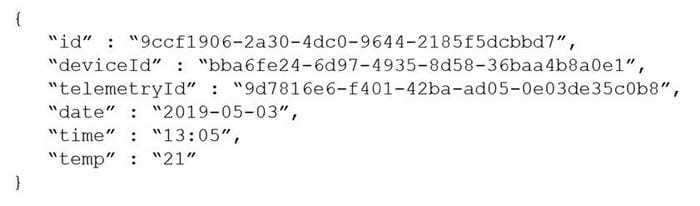

Question 6:

You have a container in an Azure Cosmos DB Core (SQL) API account. The container stores telemetry data from IoT devices. The container uses telemetry as the partition key and has a throughput of 1,000 request units per second (RU/s).

Approximately 5,000 IoT devices submit data every five minutes by using the same telemetry value.

You have an application that performs analytics on the data and frequently reads telemetry data for a single IoT device to perform trend analysis.

The following is a sample of a document in the container.

You need to reduce the amount of request units (RUs) consumed by the analytics application. What should you do?

A. Decrease the offerThroughput value for the container.

B. Increase the offerThroughput value for the container.

C. Move the data to a new container that has a partition key of deviceId.

D. Move the data to a new container that uses a partition key of date.

Correct Answer: C

The partition key is what will determine how data is routed in the various partitions by Cosmos DB and needs to make sense in the context of your specific scenario. The IoT Device ID is generally the “natural” partition key for IoT applications.

Reference: https://docs.microsoft.com/en-us/azure/architecture/solution-ideas/articles/iot-using-cosmos-db

Question 7:

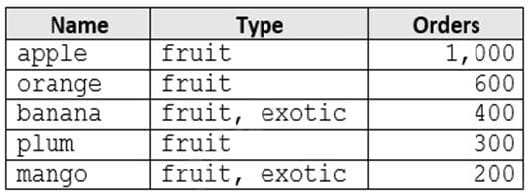

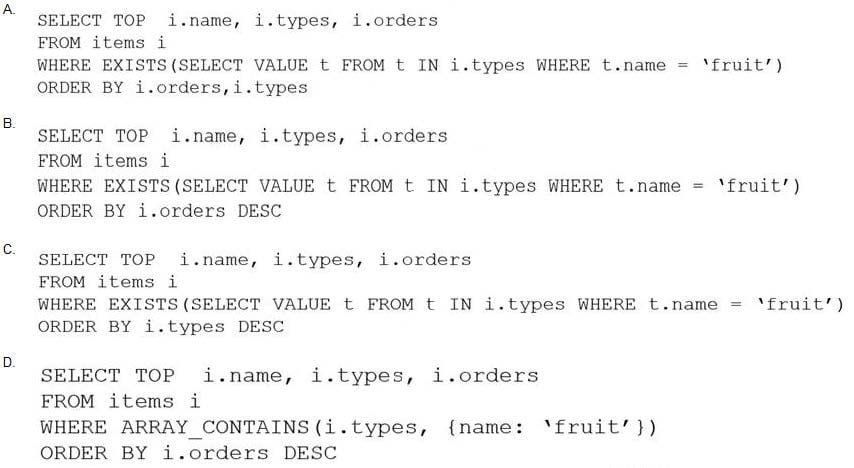

You are developing an application that will use an Azure Cosmos DB Core (SQL) API account as a data source. You need to create a report that displays the top five most ordered fruits as shown in the following table.

A collection that contains aggregated data already exists. The following is a sample document:

{

"name": "apple",

"type": ["fruit", "exotic"],

"orders": 10000

}

Which two queries can you use to retrieve data for the report? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A. Option A

B. Option B

C. Option C

D. Option D

Correct Answer: BD

ARRAY_CONTAINS returns a Boolean indicating whether the array contains the specified value. You can check for a partial or full match of an object by using a boolean expression within the command.

Incorrect Answers:

A: Default sorting ordering is Ascending. Must use Descending order.

C: Order on Orders not on Type.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-array-contains
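Because the answer options are images, the exact query text is not reproduced in this article. The sketch below shows one query of the kind the explanation describes (ARRAY_CONTAINS filter, descending order, top five) together with a plain-Python equivalent, so you can check the logic without an Azure account. The query string and every sample document except the apple one are assumptions made up for illustration.

```python
from typing import Dict, List

# Hypothetical query text; not copied from the exam options.
QUERY = (
    "SELECT TOP 5 c.name, c.orders FROM c "
    'WHERE ARRAY_CONTAINS(c.type, "fruit") '
    "ORDER BY c.orders DESC"
)

# Only the apple document comes from the question; the rest are invented.
SAMPLE_ITEMS: List[Dict] = [
    {"name": "apple", "type": ["fruit", "exotic"], "orders": 10000},
    {"name": "carrot", "type": ["vegetable"], "orders": 20000},
    {"name": "mango", "type": ["fruit", "exotic"], "orders": 9000},
    {"name": "grape", "type": ["fruit"], "orders": 8000},
    {"name": "banana", "type": ["fruit"], "orders": 7000},
    {"name": "pear", "type": ["fruit"], "orders": 3000},
    {"name": "kiwi", "type": ["fruit"], "orders": 500},
]


def top_fruits(items: List[Dict], limit: int = 5) -> List[Dict]:
    """In-memory equivalent of QUERY: filter on the type array, sort descending, take the top rows."""
    fruits = [item for item in items if "fruit" in item["type"]]
    fruits.sort(key=lambda item: item["orders"], reverse=True)
    return [{"name": f["name"], "orders": f["orders"]} for f in fruits[:limit]]


if __name__ == "__main__":
    print(QUERY)
    for row in top_fruits(SAMPLE_ITEMS):
        print(row["name"], row["orders"])
```

Dropping `reverse=True` (the equivalent of omitting DESC) would return the least ordered fruits, which is the mistake option A makes.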

Question 8:

You have an Azure Cosmos DB for NoSQL account named account1 that supports an application named App1. App1 uses the consistent prefix consistency level.

You configure account1 to use a dedicated gateway and integrated cache.

You need to ensure that App1 can use the integrated cache.

Which two actions should you perform for App1? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Change the connection mode to direct

B. Change the account endpoint to https://account1.sqlx.cosmos.azure.com.

C. Change the consistency level of requests to strong.

D. Change the consistency level of requests to session.

E. Change the account endpoint to https://account1.documents.azure.com

Correct Answer: BD

The Azure Cosmos DB integrated cache is an in-memory cache that is built into the Azure Cosmos DB dedicated gateway. The dedicated gateway is front-end compute that stores cached data and routes requests to the backend database.

You can choose from a variety of dedicated gateway sizes based on the number of cores and memory needed for your workload. The integrated cache can reduce the RU consumption and latency of read operations by serving them from the cache instead of the backend containers.

For your scenario, to ensure that App1 can use the integrated cache, you should perform these two actions:

Change the account endpoint to https://account1.sqlx.cosmos.azure.com. This is the dedicated gateway endpoint that you need to use to connect to your Azure Cosmos DB account and leverage the integrated cache. The standard gateway endpoint (https://account1.documents.azure.com) will not use the integrated cache.

Change the consistency level of requests to session. This is the highest consistency level that is supported by the integrated cache. If you use a higher consistency level (such as strong or bounded staleness), your requests will bypass the integrated cache and go directly to the backend containers.
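The endpoint change amounts to swapping the host suffix. The helper below is a minimal sketch using only the Python standard library; the function name is hypothetical, and in practice you would normally copy the dedicated gateway connection string from the Azure portal rather than derive it. The client also has to connect in gateway mode rather than direct mode for the dedicated gateway to be used.

```python
from urllib.parse import urlsplit, urlunsplit

STANDARD_SUFFIX = ".documents.azure.com"
DEDICATED_SUFFIX = ".sqlx.cosmos.azure.com"


def dedicated_gateway_endpoint(standard_endpoint: str) -> str:
    """Rewrite a standard account endpoint to its dedicated gateway form."""
    parts = urlsplit(standard_endpoint)
    host = parts.hostname or ""
    if not host.endswith(STANDARD_SUFFIX):
        raise ValueError(f"not a standard Azure Cosmos DB endpoint: {standard_endpoint}")
    new_host = host[: -len(STANDARD_SUFFIX)] + DEDICATED_SUFFIX
    # Preserve an explicit port such as :443 if the caller supplied one.
    netloc = new_host if parts.port is None else f"{new_host}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    print(dedicated_gateway_endpoint("https://account1.documents.azure.com"))
```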

Question 9:

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Cosmos DB Core (SQL) API account named Account1 that uses autoscale throughput.

You need to run an Azure function when the normalized request units per second for a container in Account1 exceeds a specific value.

Solution: You configure an application to use the change feed processor to read the change feed and you configure the application to trigger the function.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Instead, configure an Azure Monitor alert to trigger the function.

You can set up alerts from the Azure Cosmos DB pane or the Azure Monitor service in the Azure portal.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/create-alerts

Question 10:

You have an Azure Cosmos DB Core (SQL) API account.

You configure the diagnostic settings to send all log information to a Log Analytics workspace.

You need to identify when the provisioned request units per second (RU/s) for resources within the account were modified.

You write the following query.

AzureDiagnostics

| where Category == "ControlPlaneRequests"

What should you include in the query?

A. | where OperationName startswith "AccountUpdateStart"

B. | where OperationName startswith "SqlContainersDelete"

C. | where OperationName startswith "MongoCollectionsThroughputUpdate"

D. | where OperationName startswith "SqlContainersThroughputUpdate"

Correct Answer: A

The following are the operation names in diagnostic logs for different operations:

1. RegionAddStart, RegionAddComplete

2. RegionRemoveStart, RegionRemoveComplete

3. AccountDeleteStart, AccountDeleteComplete

4. RegionFailoverStart, RegionFailoverComplete

5. AccountCreateStart, AccountCreateComplete

6. AccountUpdateStart*, AccountUpdateComplete

7. VirtualNetworkDeleteStart, VirtualNetworkDeleteComplete

8. DiagnosticLogUpdateStart, DiagnosticLogUpdateComplete

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/audit-control-plane-logs

Question 11:

You need to provide a solution for the Azure Functions notifications following updates to the con-product container. The solution must meet the business requirements and the product catalog requirements.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Configure the trigger for each function to use a different leaseCollectionPrefix

B. Configure the trigger for each function to use the same leaseCollectionName

C. Configure the trigger for each function to use a different leaseCollectionName

D. Configure the trigger for each function to use the same leaseCollectionPrefix

Correct Answer: AB

leaseCollectionPrefix: when set, the value is added as a prefix to the leases created in the Lease collection for this Function. Using a prefix allows two separate Azure Functions to share the same Lease collection by using different prefixes.

Scenario: Use Azure Functions to send notifications about product updates to different recipients.

Trigger the execution of two Azure functions following every update to any document in the con-product container.

Reference:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb-v2-trigger
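To make the shared-lease setup concrete, here is a rough sketch that builds two trigger bindings in the function.json shape and checks that they can share one lease collection. The property names follow the trigger settings named in the answer options, which apply to versions of the Azure Functions Cosmos DB extension before 4.x. The connection setting, database name, lease collection name, and prefixes are all hypothetical, and the helper functions are only for illustration.

```python
from typing import Dict, List


def cosmos_trigger_binding(lease_prefix: str) -> Dict[str, object]:
    """Build one cosmosDBTrigger binding for the con-product container."""
    return {
        "type": "cosmosDBTrigger",
        "name": "documents",
        "direction": "in",
        "connectionStringSetting": "CosmosConnection",  # hypothetical app setting name
        "databaseName": "catalog",                      # hypothetical database name
        "collectionName": "con-product",
        "leaseCollectionName": "leases",                # hypothetical; same for both functions
        "leaseCollectionPrefix": lease_prefix,          # must be different for each function
        "createLeaseCollectionIfNotExists": True,
    }


def can_share_lease_collection(bindings: List[Dict[str, object]]) -> bool:
    """True when all bindings use one lease collection and every prefix is unique."""
    lease_names = {b["leaseCollectionName"] for b in bindings}
    prefixes = [b["leaseCollectionPrefix"] for b in bindings]
    return len(lease_names) == 1 and len(set(prefixes)) == len(prefixes)


if __name__ == "__main__":
    bindings = [cosmos_trigger_binding("email-"), cosmos_trigger_binding("sms-")]
    print(can_share_lease_collection(bindings))
```

With distinct prefixes, each function keeps its own lease documents inside the shared collection, so both functions receive every change to the container.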

Question 12:

Your company develops an application named App1 that uses the Azure Cosmos DB SDK and the Eventual consistency level.

App1 queries an Azure Cosmos DB for NoSQL account named account1.

You need to identify which consistency level to assign to App1 to meet the following requirements:

Maximize the throughput of the queries generated by App1 without increasing the number of request units currently used by the queries.

Provide the highest consistency guarantees.

Which consistency level should you identify?

A. Strong

B. Bounded Staleness

C. Session

D. Consistent Prefix

Correct Answer: D

Reference: https://learn.microsoft.com/en-us/azure/cosmos-db/consistency-levels

Question 13:

You have an Azure Cosmos DB for NoSQL account.

You need to create an Azure Monitor query that lists recent modifications to the regional failover policy.

Which Azure Monitor table should you query?

A. CDBPartitionKeyStatistics

B. CDBQueryRunTimeStatistics

C. CDBDataPlaneRequests

D. CDBControlPlaneRequests

Correct Answer: D

Question 14:

You have a database in an Azure Cosmos DB for NoSQL account that is configured for multi-region writes.

You need to use the Azure Cosmos DB SDK to implement the conflict resolution policy for a container. The solution must ensure that any conflict is sent to the conflict feed.

Solution: You set ConflictResolutionMode to Custom and you use the default settings for the policy.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Setting ConflictResolutionMode to Custom and using the default settings for the policy will not ensure that conflicts are sent to the conflict feed. You need to define a custom stored procedure using the “conflictingItems” parameter to handle conflicts properly.

Question 15:

You have an Azure Cosmos DB for NoSQL account named account1 that has a single read-write region and one additional read region.

Account1 uses the strong default consistency level.

You have an application that uses the eventual consistency level when submitting requests to the account.

How will writes from the application be handled?

A. Writes will use a strong consistency level.

B. Azure Cosmos DB will reject writes from the application.

C. The write order is not guaranteed during replication.

D. Writes will use the eventual consistency level.

Correct Answer: A

This is because the write concern is mapped to the default consistency level configured on your Azure Cosmos DB account, which is strong in this case. Strong consistency ensures that every write operation is synchronously committed to every region associated with your Azure Cosmos DB account. The eventual consistency level that the application uses only applies to the read operations. Eventual consistency offers higher availability and better performance, but it does not guarantee the order or latency of the reads.

Pass4itSure’s latest DP-420 practice questions Q&As: 117

Stay tuned for the latest DP-420 exam questions; we update them from time to time.

Achieve your dreams and have a successful career

Cloud-native applications have revolutionized the way businesses operate, providing scalability, flexibility, and cost-effectiveness. As more organizations adopt cloud-native architectures, the demand for professionals with Cosmos DB development skills is skyrocketing. Gaining expertise in Cosmos DB with the Microsoft DP-420 exam allows you to position yourself as a sought-after professional in the job market.

There are 532 Azure Cosmos DB jobs available on Indeed.com for data engineers, software architects, Java developers, and more.

According to 6figr.com, the average Azure Cosmos DB salary is Rs 2.23 lakh.

According to Glassdoor, the average salary for an Azure developer in the United States is $141,798 per year.

These figures suggest that getting certified and landing a high-paying job is feasible.

Finally

I hope this article gives you an idea of how to prepare for and pass the updated Microsoft DP-420 exam. Use the Pass4itSure DP-420 practice questions at https://www.pass4itsure.com/dp-420.html (PDF+VCE) to achieve your dreams, earn a high salary, and build a great career. Keep in mind that exams are subject to change, and so should the way you prepare: you may need to adjust your DP-420 preparation based on the latest developments to get the most out of your efforts.

Good luck with the exam.